emerging-mind lab (EML)

eJournal ISSN 2567-6466

29 Nov. 2017

info@emerging-mind.org

Author: Gerd Doeben-Henisch

EMail: gerd@doeben-henisch.de

FRA-UAS – Frankfurt University of Applied Sciences

INM – Institute for New Media (Frankfurt, Germany)

SUMMARY

This small software package is a further step in the exercise of learning Python 3 while trying to solve a given theoretical problem. The logic behind the software can be described as follows:

- This software is meant to illustrate a simple case study from the uffmm.org site. The text of the case study is not yet finished, and the software will be extended further over the next weeks or months.

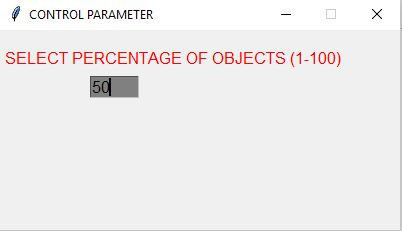

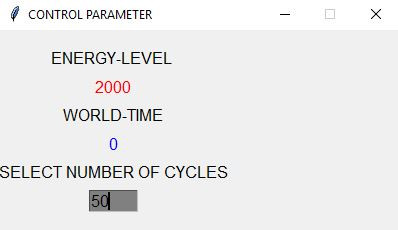

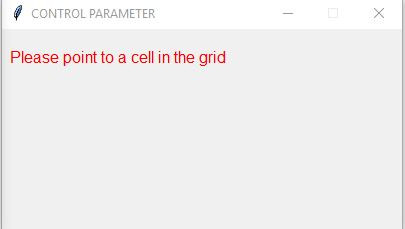

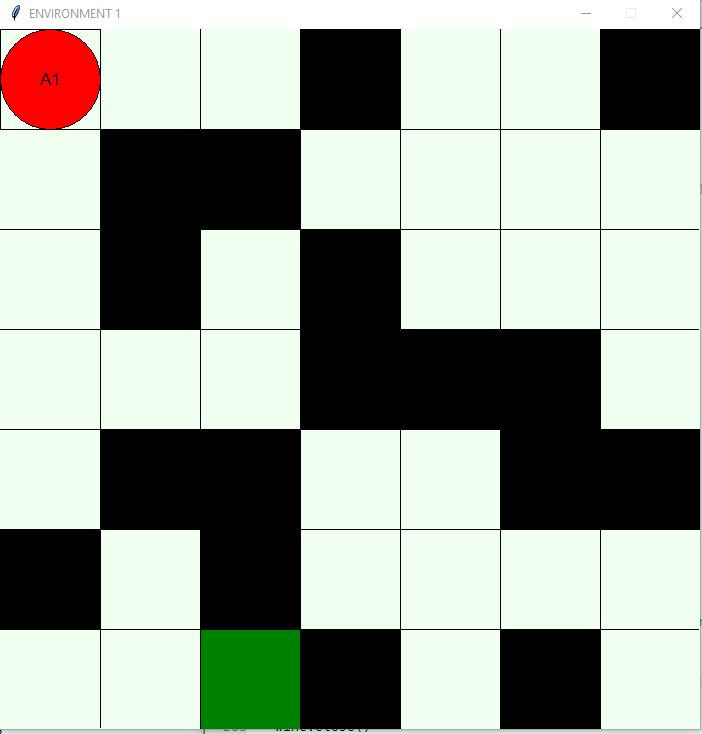

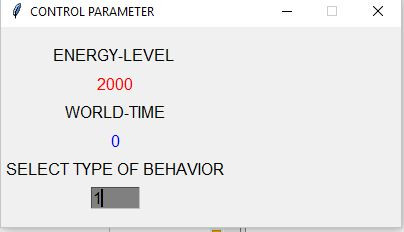

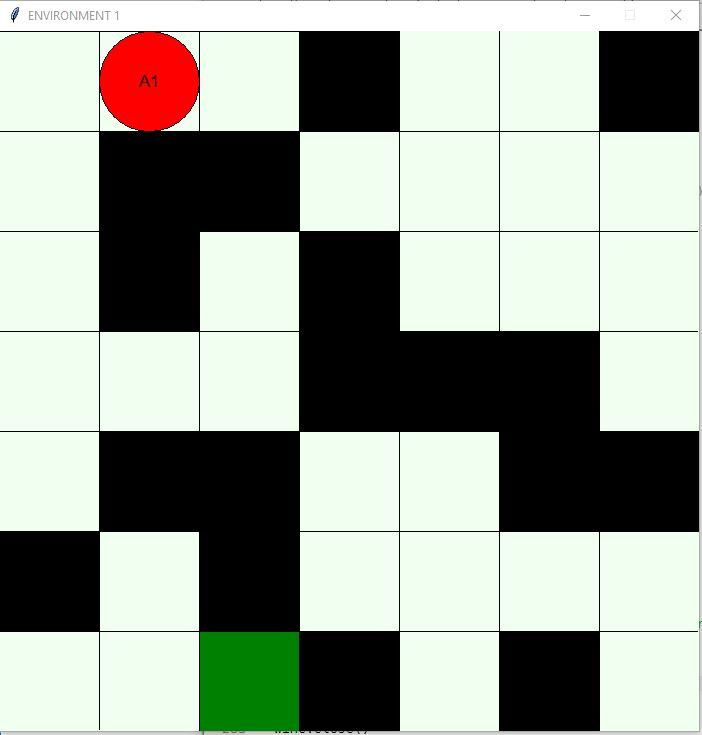

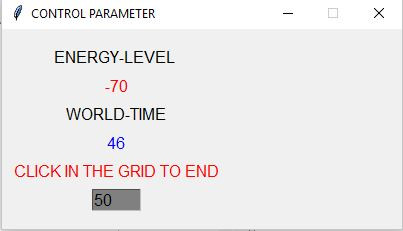

- The base version of this software offers the user a menu-driven start for defining a simple test environment in which the behaviour of (as yet) simple actors can be investigated. Every run can have n cycles, and a run can be repeated m times. At the end of a test run, a simple graphic shows the summarized results.

- The current actors have no perception, no memory, and no computational intelligence; they are driven entirely either by a fixed rule or by chance. They do, however, consume energy, which decreases over time, and they will ‘die’ if they cannot find new energy.

- A more extended description of the software will follow, both as a separate text and within the case study.

- The immediate next extensions will be examples of simple sensory models (smelling, tasting, touching, hearing, and viewing). Based on these, some exercises will follow with simple memory structures, simple model-building capabilities, simple language constructs, making music, painting pictures, and doing some arithmetic. For this, the scenario has to be extended so that there are at least three actors.

- By the way, the main motivation for doing this is philosophy of science: exercising the construction of an emerging-mind where all the parts and methods used are known. Real intelligence can never be described by its parts alone; it is an implicit function which makes the ‘whole’ different from the so-called ‘parts’. As a side effect, there can be lots of interesting applications helping humans to become better humans 🙂 But because we are free-acting systems, we can turn everything into its opposite, turning something good into ‘evil’…
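
The run structure and the actor behaviour described in the list above (n cycles per run, m repetitions, actors driven by a fixed rule or by chance, energy that decreases until the actor ‘dies’) can be sketched roughly as follows. This is not the code of the package itself, only a minimal illustration under assumptions: the names `Actor`, `step`, `run`, and `experiment`, the wrap-around square grid, the energy values, and the choice of "always move in the first direction" as the fixed rule are all hypothetical. A printed mean stands in for the summary graphic.

```python
import random
from dataclasses import dataclass

# Hypothetical move set: the four neighbouring cells on a square grid.
MOVES = [(0, 1), (1, 0), (0, -1), (-1, 0)]


@dataclass
class Actor:
    x: int
    y: int
    energy: int
    rule: str  # "fixed" or "random" (assumed labels)


def step(actor, size, food, rng, food_energy=5):
    """Move the actor one cell, charge one unit of energy, eat food if present."""
    # Assumed fixed rule: always take the first move; otherwise pick by chance.
    dx, dy = MOVES[0] if actor.rule == "fixed" else rng.choice(MOVES)
    actor.x = (actor.x + dx) % size  # the grid wraps around (assumption)
    actor.y = (actor.y + dy) % size
    actor.energy -= 1
    if (actor.x, actor.y) in food:
        food.discard((actor.x, actor.y))  # food is used up when eaten
        actor.energy += food_energy


def run(cycles, size, n_food, rule, rng, start_energy=10):
    """One run of at most `cycles` cycles; returns the number of cycles survived."""
    cells = [(x, y) for x in range(size) for y in range(size)]
    food = set(rng.sample(cells, n_food))
    actor = Actor(rng.randrange(size), rng.randrange(size), start_energy, rule)
    for cycle in range(cycles):
        if actor.energy <= 0:
            return cycle  # the actor has 'died'
        step(actor, size, food, rng)
    return cycles


def experiment(repetitions, cycles, size, n_food, rule, seed=0):
    """Repeat a run `repetitions` times and collect the survival counts."""
    rng = random.Random(seed)
    return [run(cycles, size, n_food, rule, rng) for _ in range(repetitions)]


if __name__ == "__main__":
    for rule in ("fixed", "random"):
        results = experiment(repetitions=20, cycles=50, size=7, n_food=10, rule=rule)
        mean = sum(results) / len(results)
        print(f"{rule:>6}: mean cycles survived = {mean:.1f}")
```

Because such an actor neither perceives nor remembers anything, any difference between the two rules in a sketch like this comes only from how the movement pattern happens to cover the grid, not from anything the actor ‘knows’.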